Data

Streams

Live data processing provides you with a major competitive advantage. Do not solely dump your data into a lake and analyze it in batches. Instead, let us enable you to run online analytics and act instantaneously. We create live decision support and reporting systems. You will run powerful AI on your live data, observing effectiveness and efficiency at any point in time.

Based upon many years of experience with stream data systems and all sorts of inputs and outputs, as well as continuous training and in-house special software, we engineer your bespoke stream data solution.

Quick

for Data Streams

Quick receives and orchestrates your data streams. It runs and maintains your analytics and AI algorithms on your data streams. Quick exposes production API backends and connects your data streams to your applications and devices. Quick facilitates stream data quality and global consistency. Quick is easy to integrate, yet scalable and reliable.

Based upon our experience from many bespoke stream data projects, Quick is the quintessence engineered with passion by bakdata. It is built upon Apache Kafka and runs on Kubernetes, in your private, hybrid or public cloud.

Open

Source

We believe that a vendor-neutral offer provides our customers with the ability to deploy their own expertise and develop software at their own discretion. Together with you, we open source a number of our solutions:

kpops

KPOps can not only deploy a Kafka pipeline to a Kubernetes cluster, but also reset, clean or destroy it!

streams-explorer

Explore Data Pipelines in Apache Kafka.

streams-bootstrap

A micro-framework that lets you focus on writing Kafka Streams Apps.

kafka-large-message-serde

A Kafka Serde that reads and writes records from and to a blob storage, such as Amazon S3 and Azure Blob Storage, transparently.

kafka-profile-store

A Kafka Streams application that creates a queryable object store.

fluent-kafka-streams-tests

Write clean and concise tests for your Kafka Streams application.

Talks

2025

Scaling Semantic Search: Apache Kafka Meets Vector Databases

Presenters: Jakob Edding, Raphael Lachtner

This talk presents a performance-tuned Apache Kafka pipeline for generating embeddings on large-scale text data streams. To store embeddings, our implementation supports various vector databases, making it highly adaptable to many applications.

Text embeddings are fundamental for semantic search and recommendation, representing text in high-dimensional vector spaces for efficient similarity search using approximate k-nearest neighbors (kNN). By storing these embeddings and providing semantic search results given a query, vector databases are central to retrieval-augmented generation systems.

We present our Kafka pipeline for continuously embedding texts to enable semantic search on live data. We demonstrate its end-to-end implementation while addressing key technical challenges:

First, the pipeline performs text chunking to adhere to the maximum input sequence length of the embedding model. We use an optimized overlapping text chunking strategy to ensure that context is maintained across chunks.

Using HuggingFace’s Text Embeddings Inference (TEI) toolkit in a lightweight, containerized GPU environment, we achieve efficient, scalable text embedding computation. TEI supports a wide range of state-of-the-art embedding models.

As an alternative to relying on Kafka Streams, our solution implements optimized processing of small batches using Kafka consumer and producer client APIs, allowing batched API calls to TEI. Our benchmark results confirm this choice, indicating high efficiency with significantly improved throughput and reduced latency compared to other approaches.

Finally, Kafka Connect allows real-time ingestion into vector databases like Qdrant, Milvus, or Vespa, making embeddings instantly available for semantic search and recommendation.

With Kafka’s high-throughput streaming, optimized interactions with GPU-accelerated TEI, and efficient vector serialization, our pipeline achieves scalable embedding computation and ingestion into vector databases.

Watch presentation and slides

2024

Constructing Knowledge Graphs from Data Streams

Presenter: Jakob Edding

Knowledge Graphs are widely used to represent heterogeneous domain knowledge and provide an easy understanding of relationships between domain entities. Instead of traditional SQL databases, knowledge graphs are often stored and queried in graph databases such as Neo4j.

In this talk, we introduce a system we built on top of Apache Kafka for continuously aggregating entities and relationships harvested from different data streams. The aggregated edge stream is enriched with measures, such as entity co-occurrence, and then delivered efficiently to Neo4j using Kafka Connect.

Graph databases reach their limitations when aggregating and updating a large number of entities and relationships. We therefore frontload aggregation steps using stateful Kafka Streams applications. This battle-tested technology allows scalable aggregation of large data streams in near real-time.

With this, our approach furthermore allows implementing complex measures with Kafka Streams that are not yet supported in graph databases, for example, quantifying the regularity of entity co-occurrence in data streams over a long period of time.

We will demonstrate our system with real-world examples, such as mining taxi traffic in NYC. We focus on our Kafka Streams implementation to compute complex measures and discuss the learnings that lead to our design decisions.

Watch presentation and slides

Towards Global State in Kafka Streams

Presenter: Torben Meyer

Partitioned state is a fundamental building block of Kafka Streams for processing data in a distributed, asynchronous and data-parallel fashion. With this, high performance and scalability can be achieved, given a proper configuration with respect to state’s sizes and rebalance settings. However, besides partitioned state there is often a need for consistent, up-to-date, and complete reference data that is available on each replica, i.e., global state.

In this talk, we explore and compare different approaches of maintaining global state for Kafka Streams applications. We first investigate the features Kafka Streams provides out-of-the-box: Repartitioning, GlobalKTables and Interactive Queries. We then focus on integrating third-party systems into Kafka Streams, such as distributed in-memory caches. We illustrate all approaches based on an example scenario, where we combine ecommerce data streams with globally available stock and delivery data.

Finally, we demonstrate our coordination-free approach to build a consistent global state over time, across all replicas, based on a continuous reference data stream. For this, we rephrase the problem and restructure the global state to eliminate the need for coordination. Then, Kafka Streams applications can scale again independently with their partitioned state.

Watch presentation and slides

2023

Implementing Real-Time Analytics with Kafka Streams

Presenter: Ramin Gharib

Real-time analytics requires manifold query types, which, for example, perform custom aggregations over groups of events, such as ordered sequences of selected events that meet certain criteria on keys or respective values. In practice, there are several query types which are not yet supported out-of-the-box. Kafka Streams provides state stores, which enable maintaining arbitrary state. These state stores can be used to store data ready for real-time analytics. Interactive Queries facilitate to leverage application state from outside the streams application. With this, you can retrieve individual events or ranges of events as input for aggregation operations in real-time analytics.

As an example, we focus on order-preserving range queries. As one can read in the documentation, Kafka Streams’ Interactive Queries on ranges do not provide ordering guarantees. This may result in insufficient analytics and sorting large sets of events in main memory is not always feasible.

In our talk, we discuss different approaches and highlight an indexing strategy for guaranteeing the order of a range query. We will discuss the pros and cons and finally demonstrate a real-world example of our solution. Furthermore, we showcase how our approach can also be applied to other implementations of custom analytics queries.

Timely Auto-Scaling of Kafka Streams Pipelines with Remotely Connected APIs

Presenter: Torben Meyer

Calling APIs over the network from Kafka Streams is often a necessary evil: Although it can incur significant costs by blocking the processing and thus making our pipelines less reliable, we are sometimes forced to integrate business process-related microservices or machine learning models and other technology that does not pair well with the JVM. While we scale the Kafka Streams applications and the services in Kubernetes to avoid blocked pipelines, it is often too late, as the scaling traditionally relies on metrics like the consumer group lag or number of HTTP requests.

In this talk, we first give an overview of the caveats when integrating such services in Kafka Streams and basic approaches for mitigating those. Second, we present our solution for the timely scaling of complex Kafka Streams pipelines in conjunction with remotely connected APIs. In addition to the well-known metrics, we take further dimensions into account. Powered by Kafka Streams, we observe our data stream, extract metadata, aggregate statistics, and finally expose them as external metrics. We integrate this with auto-scaling frameworks such as KEDA to reliably scale our pipelines just in time.

2022

Streaming Updates through Complex Operations in Kafka Streams at Scale

Presenter: Victor Künstler

With Kafka Streams, you can build complex stream processing topologies, including Joins and Aggregations over data streams. Making these complex processing pipelines aware of updates (including deletions) often becomes difficult, especially for previously joined and aggregated data. Because of the sheer amount of data, re-aggregating or re-joining from scratch at some time to handle updates correctly is not desirable.

In practice, these updates play an important role in stream processing, for example, to continuously improve data quality, to ensure data privacy, or to handle late-arriving data. This talk explores how we efficiently handle these stream updates and deletions in consecutive joins with Kafka Streams. Furthermore, we present an optimization for the aggregate operation in Kafka Streams, leveraging state stores to handle updates in complex aggregates. We discuss the challenges we encountered running complex stream processing topologies on Kubernetes and explore the solution with hands-on experiments.

Finally, we demonstrate how splitting the stream processing topologies enables us to have more fine granular control over resource allocation and scalability of different consecutive processing steps. We show how this improves cost-efficiency through autoscaling and the overall manageability of such streaming pipelines.

A Kafka-based platform to process medical prescriptions of Germany’s health insurance system

Presenter: Torben Meyer

With the beginning of 2022, the German government introduced an electronic prescription format for medications, comprising everything from the prescription through the pharmacy dispense to the invoice. It is described by FHIR – the global standard for exchanging electronic health data. Together with spectrumK, a service company for Germany’s health insurers, we have built a platform on top of Apache Kafka and Kafka Streams that can process and approve prescriptions at large scale.

In this session, we present different aspects of the platform. We highlight the benefits of our approach – converting the complex FHIR schemas to Protobuf – compared to working directly with data in the FHIR format. We further showcase how we use Kafka Streams to integrate a multitude of sources and build complex profiles of master data. These profiles are then exposed through an interplay of Kafka, Protobuf and GraphQL and among others, requested during the approval process. This complex process includes a variety of microservices. We explain how we have developed an asynchronous and synchronous mode for the process, so that the platform can support orthogonal requirements. Finally, we share our learnings on how to auto-scale such a platform.

2021

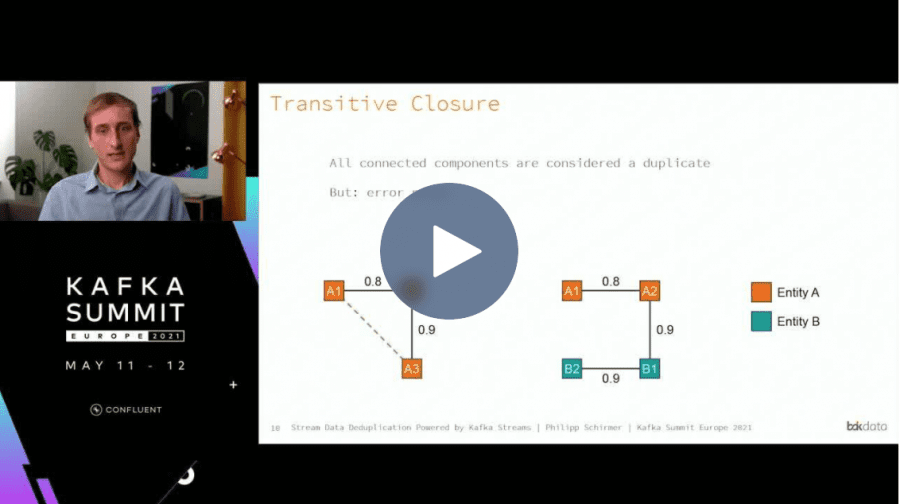

Stream Data Deduplication Powered by Kafka Streams

Presenter: Philipp Schirmer

Representations of data, e.g., describing news, persons or places, differ. Therefore, we need to identify duplicates, for example, if we want to stream deduplicated news from different sources into a sentiment classifier.

We built a system that collects data from different sources in a streaming fashion, aligns them to a global schema and then detects duplicates within the data stream without time window constraints. The challenge is not only to process newly published data without significant delay, but also to reprocess hundreds of millions existing messages, for example, after improving the similarity measure.

In this talk, we present our implementation for deduplication of data streams built on top of Kafka Streams. For this, we leverage Kafka APIs, namely state stores, and also use Kubernetes to auto-scale our application from 0 to a defined maximum. This allows us to process live data immediately and also reprocess all data from scratch within a reasonable amount of time.

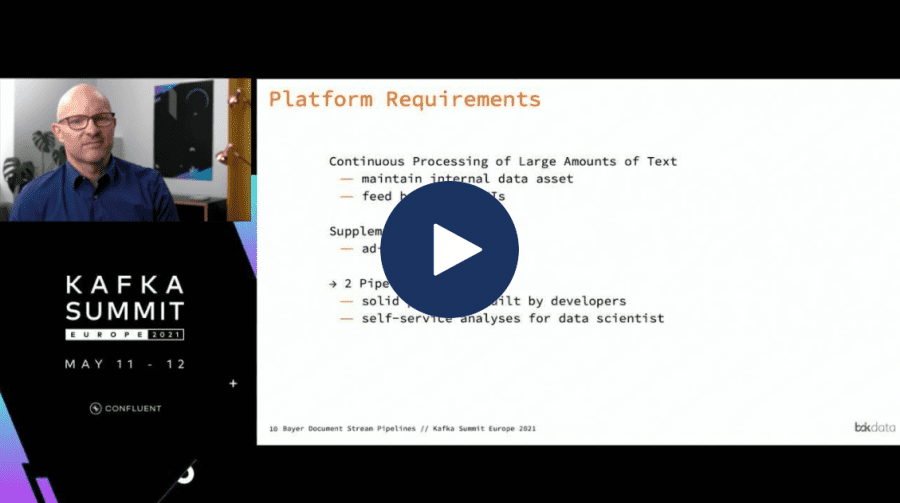

Bayer Document Stream Processing

Presenters: Dr. Astrid Rheinländer, Dr.-Ing. Christoph Böhm

Bayer selected Apache Kafka as the primary layer for a variety of document streams flowing through several text processing and enrichment steps. Every day, Bayer analyzes numerous documents including clinical trials, patents, reports, news, literature, etc. We will give an idea about the strategic importance, peek into future challenges and we will provide an end-to-end technical overview.

Throughout the discussion, we will look at challenges we handle in the platform and discuss respective solutions. Among others, we discuss our approach to continuously pull in data from a variety of external sources and how we harmonize different formats and schemas. We discuss large document processing and error handling, which allows efficient debugging while not blocking the pipeline.

Then, we take on the user’s perspective and demo the platform. One will learn how users create new document processing pipelines and how Bayer keeps track of the many running Kafka pipelines.

Cost-effective GraphQL Queries against Kafka Topics at scale

Presenter: Torben Meyer

In our projects, we often have to query the content of Kafka topics. To that end, we expose REST-APIs based on Kafka Streams’ interactive queries. However, this approach has some shortcomings. For example, users must stitch various APIs and results together. Furthermore, it can become costly as each topic’s API requires one or more JVMs. In this talk, we show how GraphQL can serve single queries involving multiple Kafka topics returning only data the user requested. Our approach eliminates unnecessary overhead and the lack of flexibility associated with traditional API-approaches on Kafka topics. We will also highlight different ways to reduce costs for computational resources such as CPU and RAM. First, we introduce more efficient queries through smart sub-query routing. Second, we build an ahead-of-time compiled, self-contained executable with GraalVM’s Native Image and compare it to the traditionally packaged JAR regarding memory usage and performance for different query workloads.

2020

End-to-end large messages processing with Kafka Streams & Kafka Connect

Presenter: Philipp Schirmer

There are several data streaming scenarios, where the messages are too large at the beginning when published to Kafka, or become too large during processing when puzzled together and pushed to the next Kafka topic or to another data system. Due to performance impacts, the default message size in Kafka is 1 MB. Although this limit can be increased, there will always be messages exceeding the configured limit and therefore are too large for Kafka.

Therefore, we implemented a lightweight and transparent approach to publish and process large messages with Kafka Streams and Kafka Connect. Messages exceeding a configurable maximum message size are stored on an external file system, such as Amazon S3. By using the available Kafka APIs, i.e., SerDes and Kafka Connect Converters, this process works transparently without changing any existing code. Our implementation works as a wrapper for actual serialization and deserialization and is thus suitable for any data format used with Kafka.

2019

Mining Stream Data mit Apache Kafka

Presenter: Dr.-Ing. Alexander Albrecht

Many Big Data projects deal with never ending data streams. In such streaming scenarios, it does not suffice to run a pre-trained model for making highly accurate predictions.

Also, most machine learning techniques require the complete input during the training phase and thus cannot be trained at high speed in real time.

In this talk, we demonstrate how to implement incremental Decision Tree learning in Kafka Streams. The presented techniques can be transferred to other stream learning approaches.